It’s no secret that our world is becoming increasingly inundated with data. All you have to do is look at the sheer number and variety of connected devices to understand that a massive amount of data is being generated today.

And believe it or not, that volume is likely to grow significantly over the next few years. But many people don’t realize two important facts about that data. First, the wide range of devices produces myriad data types that must somehow be correlated and normalized so that everything can be analyzed together. That means reconciling different formats, different fields, different mappings, and so on. No small task!
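To make the normalization problem concrete, here’s a minimal Python sketch that maps two differently shaped device records onto one common schema. The source names (`firewall`, `cloud_vm`), their field names, and the target schema are all hypothetical, chosen only for illustration; the numeric severities follow the syslog convention (3 = error, 4 = warning, 6 = informational), but real orchestration platforms use their own mappings.

```python
from datetime import datetime, timezone

# Hypothetical per-source field mappings: source field name -> common field name.
FIELD_MAPS = {
    "firewall": {"ts": "timestamp", "sev": "severity", "msg": "message"},
    "cloud_vm": {"eventTime": "timestamp", "level": "severity", "text": "message"},
}

# Hypothetical severity vocabularies folded onto one scale.
# Numeric keys follow syslog-style codes; string keys are a made-up cloud format.
SEVERITY_MAP = {
    "3": "error", "4": "warning", "6": "info",
    "ERROR": "error", "WARN": "warning", "INFO": "info",
}

def normalize(source: str, record: dict) -> dict:
    """Rename fields and coerce values so records from different devices line up."""
    mapping = FIELD_MAPS[source]
    out = {common: record[raw] for raw, common in mapping.items() if raw in record}
    ts = out.get("timestamp")
    if isinstance(ts, (int, float)):
        # Epoch seconds -> ISO 8601 string in UTC.
        out["timestamp"] = datetime.fromtimestamp(ts, tz=timezone.utc).isoformat()
    if "severity" in out:
        # Unrecognized severities are flagged rather than silently guessed.
        out["severity"] = SEVERITY_MAP.get(str(out["severity"]), "unknown")
    out["source"] = source
    return out
```

After normalization, a firewall record like `{"ts": 0, "sev": 3, "msg": "denied"}` and a cloud record like `{"eventTime": "1970-01-01T00:00:00+00:00", "level": "ERROR", "text": "disk full"}` share the same keys and severity scale, so they can be queried together.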

Second, not all data has the same value. Think about it: if you’re an e-commerce company and you have performance data on the speed of credit card transactions, or on the performance and availability of your site, you’d likely deem it business-critical. But would you value data from your email server as highly? And then there are the logs from your network attached storage (NAS) device, which may be telling you that a mid-day snapshot failed. While that is certainly important to know, the device may generate that identical log 100 times; do you need them all, or just one? And what about a dataset that’s missing so many important fields that it’s essentially useless? Given all of this, I think we can agree that not all data is created equal.
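The NAS example suggests two simple reduction rules: collapse repeated logs into a single record with a count, and drop records too incomplete to use. Here’s a minimal Python sketch, assuming records are flat dictionaries; the required field names are hypothetical, and “identical” is taken to mean the same host and message, since repeated logs usually differ only in their timestamps.

```python
from collections import Counter

REQUIRED_FIELDS = ("timestamp", "host", "message")  # hypothetical required fields

def is_complete(record: dict) -> bool:
    """A record is usable only if every required field is present and non-empty."""
    return all(record.get(field) for field in REQUIRED_FIELDS)

def dedupe(records: list[dict], key_fields: tuple = ("host", "message")) -> list[dict]:
    """Collapse records sharing the same key fields into one record with a repeat count.

    Incomplete records are dropped first. The first occurrence of each key is kept
    (so its timestamp marks when the problem started), and 'count' records how many
    times it appeared.
    """
    counts: Counter = Counter()
    first_seen: dict = {}
    for record in records:
        if not is_complete(record):
            continue  # too incomplete to be useful downstream
        key = tuple(record[f] for f in key_fields)
        counts[key] += 1
        first_seen.setdefault(key, record)
    return [{**first_seen[key], "count": n} for key, n in counts.items()]
```

Fed 100 copies of the same “snapshot failed” log, this returns one record with `count` set to 100, which preserves the signal while discarding 99 redundant records.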

But why is all of this important? For starters, the more data you have in your consideration set, the harder it is to draw any conclusions, or at least to draw them in a reasonable timeframe. Couple that with the varying degrees of value discussed above, and you can quickly see that much of that data is simply noise: noise that gets in the way of your ability to assess your valuable data.

But there’s a second, related reason this matters, and it has to do with how data analytics vendors price their products. For the overwhelming majority of them, pricing is based on the volume of data ingested. While that model makes sense, the problem is that these vendors charge the same rate for all data, even though different types of data vary vastly in value.

These two reasons alone make it essential to cut through the noise and focus solely on the data that’s meaningful. This is where data orchestration platforms come into play: they reduce the volume of data sent to analytics platforms, which saves costs. They also enrich, normalize, and optimize the data so that all of those disparate data types work together, which makes the entire dataset more valuable.
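A rough sketch of that reduction step: assign each source a value tier, forward critical data in full, sample low-value data, drop noise, and compare what ingestion would cost before and after under a flat per-GB rate. The source names, tiers, sample rate, and `PRICE_PER_GB` are hypothetical placeholders, not any vendor’s actual policy or pricing.

```python
import json
import random

# Hypothetical routing policy: source -> (value tier, fraction of records to keep).
POLICY = {
    "payments": ("critical", 1.0),  # keep everything
    "web": ("critical", 1.0),
    "email": ("low", 0.1),          # keep a 10% sample
    "nas": ("noise", 0.0),          # drop entirely (e.g., after deduplication upstream)
}
PRICE_PER_GB = 0.50  # hypothetical flat ingestion rate, identical for all data

def route(records: list[dict], seed: int = 0) -> list[dict]:
    """Return only the records that should be forwarded to the analytics platform."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    kept = []
    for record in records:
        # Unknown sources are kept by default, so nothing unexpected is silently lost.
        _tier, keep_fraction = POLICY.get(record["source"], ("unknown", 1.0))
        if keep_fraction >= 1.0 or rng.random() < keep_fraction:
            kept.append(record)
    return kept

def ingest_cost(records: list[dict]) -> float:
    """Estimate ingestion cost from the serialized JSON size of the records."""
    size_bytes = sum(len(json.dumps(r).encode("utf-8")) for r in records)
    return size_bytes / 1e9 * PRICE_PER_GB
```

Comparing `ingest_cost(records)` with `ingest_cost(route(records))` shows the point made above: because every byte costs the same, dropping or sampling low-value data cuts the bill without touching the business-critical records.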

These platforms do their job well, but they deliver only a partial solution. They certainly help reduce your data analytics costs and make the data more actionable, but they also require additional infrastructure to run. That, in turn, adds costs, both OPEX and CAPEX.

In addition to these hard costs, most orchestration platforms also add latency, since the data now passes through an additional layer of processing before it reaches the analytics platform for assessment. That latency delays the detection of problems, which can lead to unplanned costs from network downtime, malware infections, and other incidents that carry significant costs of their own. Many of these costs could be avoided, or at least minimized, if systems were in place to discover and alert on potential issues in real time.
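To illustrate what alerting in real time at the point of collection can look like, here’s a minimal sketch of a sliding-window check that a collector could run on each event as it arrives, rather than waiting for the data to reach an analytics platform. The class name, window size, threshold, and event shape are hypothetical, and the sketch assumes events arrive in timestamp order.

```python
from collections import deque

class ErrorRateAlert:
    """Signal when more than `threshold` error events arrive within `window_s` seconds."""

    def __init__(self, window_s: float = 60.0, threshold: int = 5):
        self.window_s = window_s
        self.threshold = threshold
        self.error_times: deque = deque()  # timestamps of recent errors, oldest first

    def observe(self, timestamp: float, severity: str) -> bool:
        """Process one event as it arrives; return True if the alert condition is met.

        Assumes events are observed in non-decreasing timestamp order.
        """
        if severity == "error":
            self.error_times.append(timestamp)
        # Evict errors that have fallen out of the sliding window.
        while self.error_times and timestamp - self.error_times[0] > self.window_s:
            self.error_times.popleft()
        return len(self.error_times) > self.threshold
```

Because each event is checked the moment it is collected, a burst of errors raises a signal within the same second rather than after the data has been processed downstream.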

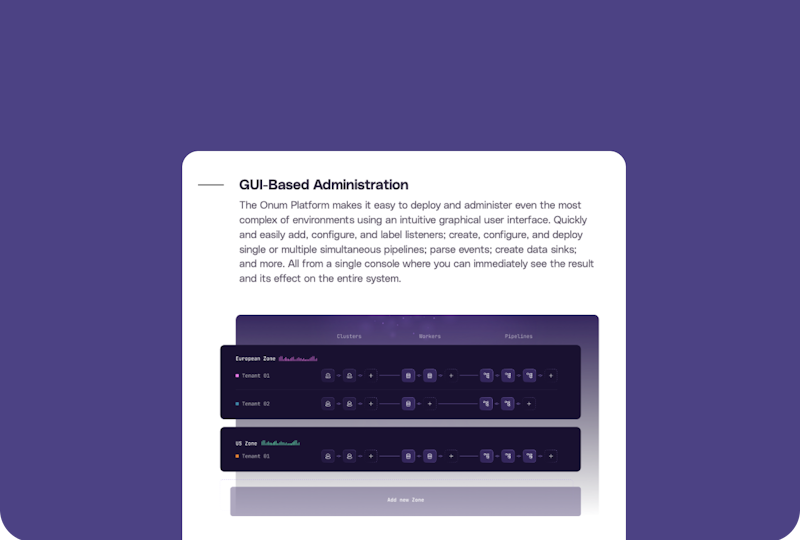

It seems like such a basic concept that you have to wonder why orchestration platforms don’t collect and observe data in real time. The truth is, it’s not a “feature” that can simply be added to an existing product; the platform has to be architected from the ground up to do this. That’s because real-time observation requires data collection at the device level, as close as possible to where the data is produced. Many orchestration vendors say they do this, but can they collect all data from all devices across the hybrid network and observe all of that data together? If a platform isn’t specifically architected to do so, the vendor will need to introduce two disparate products: one for physical, on-premises assets and another for cloud-based devices. And remember, almost no organization has a single cloud environment. There are public and private clouds on a wide range of platforms that don’t inherently work together. So you need one platform that can seamlessly integrate with all of them to unify your data and observe it as a single dataset.

The bottom line: you really need both. A strong data orchestration solution ensures that you make the best possible decisions based on a comprehensive, enriched, optimized set of your data rather than just a portion of it, while also reaping significant savings by filtering out the third of it that has no value. In addition, real-time observation of your data is essential, since it buys you vital time to take decisive action when warning signs appear in the data.

Each of these technologies provides tremendous value when you’re trying to make sense of your growing deluge of data. Layered together, though, they form a robust, comprehensive solution that helps you make timely, informed decisions.
